Since 1946, when the first general-purpose electronic computer, the ENIAC (Electronic Numerical Integrator and Computer), was completed, almost every decade has seen significant advances in computer technology. The term "computer generation" denotes the technology that emerged during a particular period, with considerable improvements in hardware, software and computing concepts. Thus roughly each decade since 1946 has given rise to a new generation of computers.

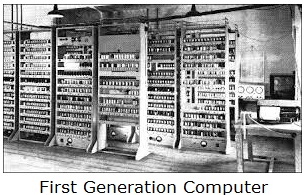

The First Generation Computers (1946 - 55)

ENIAC was the first general-purpose electronic computer to be put to use. It was developed by a team led by J. Presper Eckert and John W. Mauchly at the University of Pennsylvania in the U.S.A. It had a very small memory and was used to prepare artillery trajectory tables.

The first generation computers used vacuum tubes, and instructions were given in machine language. Most of them used the stored-program concept proposed by John von Neumann, in which both data and instructions are held in the computer's memory. Initial applications of computers were in science and engineering. The concept of an operating system had not yet emerged during the first generation, so in order to use a computer, one had to know the details of its structure and working.
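The stored-program idea can be illustrated with a toy machine in which instructions and data sit side by side in a single memory. This is only a minimal sketch: the opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), the accumulator and the memory layout are invented for illustration and do not correspond to any real first-generation machine.

```python
# Toy stored-program machine: instructions and data share one memory list.
# The opcodes and layout are hypothetical, chosen only for this illustration.

def run(memory):
    """Execute the program stored in `memory`, starting at address 0."""
    acc = 0   # accumulator register
    pc = 0    # program counter: address of the next instruction
    while True:
        op, addr = memory[pc]      # fetch the instruction from memory
        pc += 1
        if op == "LOAD":
            acc = memory[addr]     # read a data word from the same memory
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc     # write the result back into memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode: {op}")

# Addresses 0-3 hold instructions; addresses 4-6 hold data.
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,   # address 4: first operand
    3,   # address 5: second operand
    0,   # address 6: the result is stored here
]

result = run(program)
print(result[6])  # prints 5
```

Because the program is just the contents of memory, a different task needs only different memory contents, not rewiring of the machine.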

Because they were built from vacuum tubes, these computers were huge and consumed a great deal of power. The failure rate was high. Vacuum tubes also generated a lot of heat and could not operate for long without cooling.

The ENIAC itself used around 18,000 vacuum tubes and weighed about 30 tons.

EDVAC (Electronic Discrete Variable Automatic Computer), designed around von Neumann's stored-program concept, was built around 1950. Because programs were held in memory, operation of the computer became faster.

The EDSAC (Electronic Delay Storage Automatic Computer) was developed by Professor Maurice Wilkes at Cambridge University in 1949. It also used the stored-program concept.

The UNIVAC I (Universal Automatic Computer) became operational in 1951. This marked the beginning of commercial production of stored-program computers. With the advent of the UNIVAC I, computers began to be used for commercial applications. In the period from 1954 to 1959, many businesses acquired computers for data processing applications.

The Second Generation Computers (1956 - 65)

The computers of the second generation began to appear in the latter half of the 1950s. They were smaller and faster and had greater computing capacity. Vacuum tubes were replaced by tiny solid-state components called transistors, and the practice of writing programs in machine language gave way to higher-level programming languages.

The invention of the transistor by John Bardeen, Walter Brattain and William Shockley in 1947 was a revolution. Transistors were highly reliable, required less power and were faster than vacuum tubes. The introduction of magnetic core memory was another important development of the second generation period. Magnetic cores are tiny ferrite rings that can be magnetized in either direction to represent 1 and 0. They were used to construct large random access memories. Memory capacity in the second generation was about 100 kilobytes.

The higher reliability and larger memory capacity of these computers led to the development of high-level programming languages (HLLs). Languages like FORTRAN, COBOL and ALGOL were developed during this generation.

Magnetic disk and tape storage media began to be used. Batch processing operating systems were developed, which automated the operation of the computer.

Commercial applications developed rapidly during this period and dominated computer use by the mid-1960s.

Some of the second generation computers were the IBM 1401, IBM 1620, IBM 7094, RCA 501 and CDC 3600.

The Third Generation Computers (1966 - 75)

The third generation computers replaced transistors with integrated circuits (ICs). The integrated circuit was invented by Jack Kilby at Texas Instruments Inc. in 1958. An integrated circuit consists of electronic circuits fabricated on a single chip of a semiconductor material, typically silicon. All electronic components, such as transistors, resistors and capacitors, were fabricated on the silicon chip, which is cut from a larger silicon wafer. From small scale integrated circuits, which had about 10 transistors per chip, technology developed to medium scale integrated circuits with about 100 transistors per chip. The size of main memories reached about 4 megabytes. Magnetic disk storage capacities increased to around 100 MB, and CPUs became more powerful.

Time-sharing operating systems, which allowed multiple users to work with a computer simultaneously, became popular. Computers began to be used increasingly for on-line applications. Other application areas included production and manufacturing, inventory control and airline reservation systems.
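One simple way to share a single processor among several users is round-robin scheduling: each user gets a short slice of time in turn, so that everyone appears to be served at once. The sketch below is illustrative only. The function name `time_share`, the user names and the `quantum` parameter are assumptions made for this example, not a description of any particular third-generation operating system.

```python
from collections import deque

def time_share(jobs, quantum):
    """Run jobs round-robin and return the sequence of time slices granted.

    `jobs` maps a user name to the units of work that user still needs;
    `quantum` is the largest slice a user may receive in one turn.
    """
    queue = deque(jobs.items())
    schedule = []
    while queue:
        user, remaining = queue.popleft()
        used = min(quantum, remaining)
        schedule.append((user, used))
        remaining -= used
        if remaining > 0:
            queue.append((user, remaining))  # unfinished: back of the line
    return schedule

slices = time_share({"alice": 3, "bob": 5, "carol": 2}, quantum=2)
print(slices)
# [('alice', 2), ('bob', 2), ('carol', 2), ('alice', 1), ('bob', 2), ('bob', 1)]
```

With a small enough time slice, no user waits long for a turn, which is what makes the machine feel as if it were serving everyone simultaneously.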

IBM announced the System/360 family of mainframe computers. Other models were the ICL 1900 series, the IBM System/370 and the DEC PDP-11.

The Fourth Generation Computers (1976 onwards)

The development of VLSI (Very Large Scale Integration) technology led to the creation of microprocessor chips. Over time, these chips came to pack as many as a million transistors onto a single chip. The size of the main memory also increased tremendously. The microprocessor marked the beginning of a new generation of computers, the fourth generation. Powerful personal computers and minicomputers emerged. In 1976, Apple Computer produced its first personal computer, the Apple I. By the end of 1977, machines like the Apple II and the TRS-80 from Tandy Corporation were popular microcomputers.

Two companies, Intel and Motorola, began producing new microprocessors. In the early 1980s, many microcomputers were built around these processors. These computers used much more powerful operating systems. In 1981, IBM announced its first desktop microcomputer, the PC (Personal Computer). This became the de facto industry standard for PCs, and the IBM PC dominated the world market. However, IBM had a close competitor: Apple.

Whereas IBM PCs used microprocessors from the Intel family, Apple computers used Motorola processors.

The second decade of the fourth generation (1986 to present) brought great increases in the operating speed and capability of microprocessors and in the size of main memory. Main memory and hard disk capacities both grew rapidly from year to year. Microprocessors have undergone major architectural changes.

Powerful and versatile operating systems were developed that exploited new features of the processors. Advanced programming languages were developed along with new programming concepts such as object-oriented programming (OOP).

The Fifth Generation Computers (Present and Beyond)

The development of ULSI (Ultra Large Scale Integration) microprocessors marks the beginning of the fifth generation computers. The superhuman thinking computers found in science fiction do not yet exist, but some ideas from science fiction have become reality. Scientists are now trying to develop computers that can solve unstructured problems. These are the types of problems that people solve by trying various alternatives and learning from their mistakes. Until recently, only human intelligence could do this. Efforts to design computer systems that exhibit human intelligence are classified under Artificial Intelligence (AI). Researchers are trying to develop neural-network computers, machines with circuits patterned after the complex interconnections that exist between the neurons in the brain.

The central concept of fifth generation computers is Artificial Intelligence. Researchers are working to develop computers whose intelligence is close to that of human beings, but this field is still in its infancy. Artificial Intelligence based systems and expert systems (or knowledge-based systems) are capable of gaining knowledge from their surroundings and reacting to events depending on the situation. Robots have already been developed and deployed in many applications, and robots that understand the verbal commands of their operators have become a reality. Superconductors are among the technologies expected to help make artificial intelligence practical. Innovations in quantum computation, molecular technology and nanotechnology are expected to shape the future development of computers.

Examples of artificial intelligence applications are:

(1) Medical diagnostic packages that diagnose diseases and recommend treatments.

(2) Geology packages that can predict mineral deposits.

(3) Natural language programs that allow users to replace complicated computer commands with plain natural language commands.

(4) Voice recognition, which is already in use today.